From that view we'll be creating a SP say GetSalesInfo that is fetching the records.

===================================================

Alter proc demo.GetSalesInfo @ProdCat nvarchar(100)

AS

BEGIN

select h.OrderDate,h.PurchaseOrderNumber,MAX(h.SubTotal) Amount

from SalesLT.SalesOrderHeader h

inner join SalesLT.SalesOrderDetail d

on h.SalesOrderID = d.SalesOrderID

inner join SalesLT.Product p

on d.ProductID = p.ProductID

inner join SalesLT.ProductCategory pc

on pc.ProductCategoryID = p.ProductCategoryID

and pc.Name = @ProdCat

where YEAR(h.OrderDate)= 2008 and h.PurchaseOrderNumber is not null

group by h.OrderDate, h.PurchaseOrderNumber

end

go

=====================================================================

We also need to create a table and sp for logging=====================================================================

create table demo.ETLLog

( id int identity (1,1) primary key,

DateProcessed datetime default(getdate()),

ProductCategory nvarchar(100) not null,

duration int not null)

Create or ALTER proc demo.updateETLLog

@ProdCat nvarchar(100), @duration int

@ProdCat nvarchar(100), @duration int

as

begin

insert into demo.ETLLog(ProductCategory,duration)

values(@ProdCat ,@duration)

values(@ProdCat ,@duration)

end

go

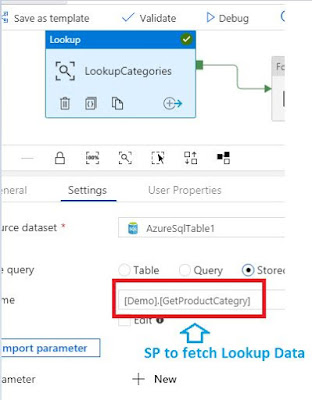

And we require one SP to fetch the lookup data as below

ALTER proc demo.GetProductCategry

AS

BEGIN

select productcategoryid, Name as Category

from SalesLT.ProductCategory

END

In ADF First you need to create a pipeline and then drag

a Lookup from the left pan of the designer.

See the highlighted part for the

Lookup where the category

Lookup where the category

is coming from the SP enabling

looking thru those category via

Foreach loop

To use from the Lookup value

by foreach loop, inside setting

of the foreach loop we need to

use the below formula inside

the setting tab under item.

Use Foreach as sequential mode

@activity('LookupCategories').output.value

if you double click the foreach loop then you'll be redirecting to the below screen

where you need to add a copy control first

The input parameter if the SP to be supplied from the parameter value

@item().Category

@item().Category

Please note Category is one of the output of the SP demo.GetProductCategry

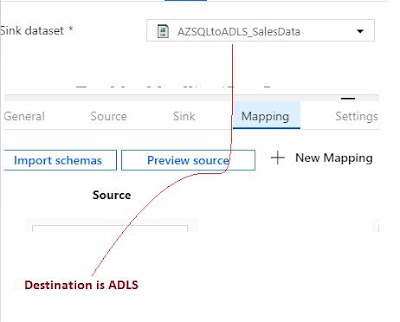

The sink here is destination.

When you'll go to the tab for

any data-set sink or source,

you'll be having two options

Choosing from the existing or

creation of a new.

I created the new data-set and

then I need to create the link

server

One is for source i.e. Azure SQL DB

For that you need to remember

the SQL credential i.e. UID and

the SQL credential i.e. UID and

pwd of Azure SQL.

For ADLS you need to add the ADF

pipeline name inside the access

tab

tab

of ADLS data explorer and use the

manage identity option.

I'm not explaining the process for ADLS security not a great extent.

Anyways if you get any security error while testing connection for

ADLS please use the below link

ADLS please use the below link

For dynamic creation of files based on category inside folder of ADLS

Use the dynamic file creation

@concat('sales_',item().Category,'.txt')